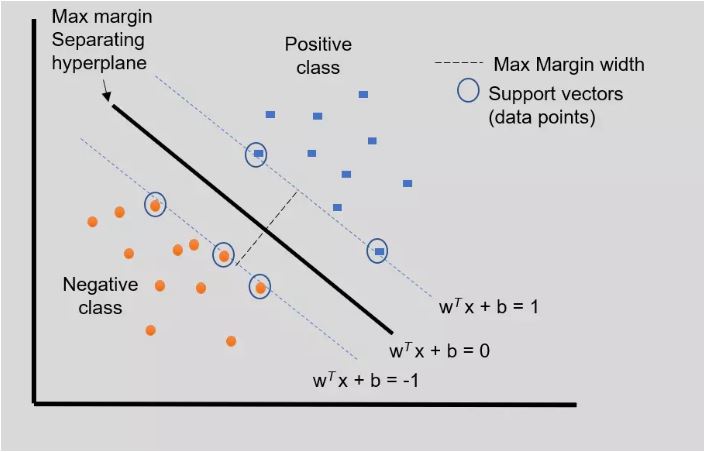

You might use a support vector machine (SVM) when your data has exactly two classes. An SVM classifies data by finding the best hyperplane that separates all data points of one class from those of the other class (the best hyperplane for an SVM is the one with the largest margin between the two classes).
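To make the "largest margin" idea concrete, here is a minimal sketch that compares two candidate separating lines on a toy dataset. The data points, the two candidate hyperplanes, and the helper name `geometric_margin` are all made up for illustration. A real SVM solves an optimization problem to find the maximum-margin hyperplane rather than choosing between hand-picked candidates.

```python
import math


def geometric_margin(w, b, points, labels):
    """Smallest signed distance from any point to the hyperplane w.x + b = 0.

    A positive result means every point lies on the correct side.
    """
    norm = math.sqrt(sum(wi * wi for wi in w))
    return min(
        y * (sum(wi * xi for wi, xi in zip(w, x)) + b) / norm
        for x, y in zip(points, labels)
    )


# Toy data (hypothetical): two classes labelled -1 and +1.
points = [(1.0, 1.0), (2.0, 0.0), (4.0, 4.0), (5.0, 3.0)]
labels = [-1, -1, 1, 1]

# Two hypothetical separating lines, each given as (w, b).
# Both classify every point correctly, but with different margins.
candidates = {
    "x1 + x2 = 5": ((1.0, 1.0), -5.0),
    "x1 = 3": ((1.0, 0.0), -3.0),
}

margins = {
    name: geometric_margin(w, b, points, labels)
    for name, (w, b) in candidates.items()
}

# The preferred hyperplane is the one whose closest point is farthest away.
best = max(margins, key=margins.get)
print(margins, best)
```

Both lines separate the classes, but the diagonal line keeps every point farther away, so it is the better of the two under the SVM criterion.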

You can also use an SVM with more than two classes, in which case the model creates a set of binary classification subproblems, with one SVM learner for each subproblem. SVMs have several advantages. First, they are often highly accurate and, when properly regularized, tend not to overfit data. Second, linear support vector machines are relatively easy to interpret. Third, a trained SVM depends only on its support vectors, so prediction is fast and you can discard the rest of the training data once the model has been trained, which helps if you have limited memory available. Finally, SVMs tend to handle complex, nonlinear classification very well by using a technique called the “kernel trick.”
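The kernel trick can be illustrated with a small worked example. The sketch below assumes a degree-2 polynomial kernel, chosen here only because its feature map is easy to write out by hand. The text does not say which kernel is meant, and the function names are hypothetical. It shows that evaluating the kernel on the original 2-D inputs gives the same number as taking a dot product in an explicit 3-D feature space, without ever building that space.

```python
import math


def poly_kernel(x, z):
    """Degree-2 homogeneous polynomial kernel: k(x, z) = (x . z)^2."""
    return (x[0] * z[0] + x[1] * z[1]) ** 2


def feature_map(x):
    """Explicit 3-D feature map whose dot product equals poly_kernel."""
    return (x[0] ** 2, math.sqrt(2) * x[0] * x[1], x[1] ** 2)


def dot(u, v):
    """Plain dot product of two equal-length sequences."""
    return sum(ui * vi for ui, vi in zip(u, v))


# Two arbitrary 2-D points (made up for illustration).
x, z = (1.0, 2.0), (3.0, -1.0)

# Implicit route: work only with the original coordinates.
implicit = poly_kernel(x, z)

# Explicit route: map both points to 3-D, then take the dot product.
explicit = dot(feature_map(x), feature_map(z))

print(implicit, explicit)
```

The two values agree up to floating-point rounding. Because an SVM only needs dot products between points, it can swap in a kernel function and learn a nonlinear boundary at roughly the cost of working in the original space.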

However, SVMs need to be trained and tuned up front, so you need to invest time in the model before you can begin to use it. Also, their speed drops considerably when you use the model with more than two classes, because each binary subproblem needs its own SVM learner.
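To see why extra classes add cost, the sketch below counts the binary subproblems under two common decomposition schemes, one-vs-one and one-vs-rest. The text does not say which scheme is used, so both are assumptions here, and the class names and helper functions are hypothetical.

```python
from itertools import combinations


def one_vs_one_pairs(classes):
    """One binary subproblem for every unordered pair of classes."""
    return list(combinations(classes, 2))


def one_vs_rest_tasks(classes):
    """One binary subproblem per class: that class against all the others."""
    return [(c, [o for o in classes if o != c]) for c in classes]


# Hypothetical label set with four classes.
classes = ["cat", "dog", "bird", "fish"]

ovo = one_vs_one_pairs(classes)
ovr = one_vs_rest_tasks(classes)

# Each subproblem needs its own trained SVM learner.
print(len(ovo), "one-vs-one learners")
print(len(ovr), "one-vs-rest learners")
```

With K classes, one-vs-one needs K(K-1)/2 learners and one-vs-rest needs K, so training and prediction work both grow as classes are added.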

Sources