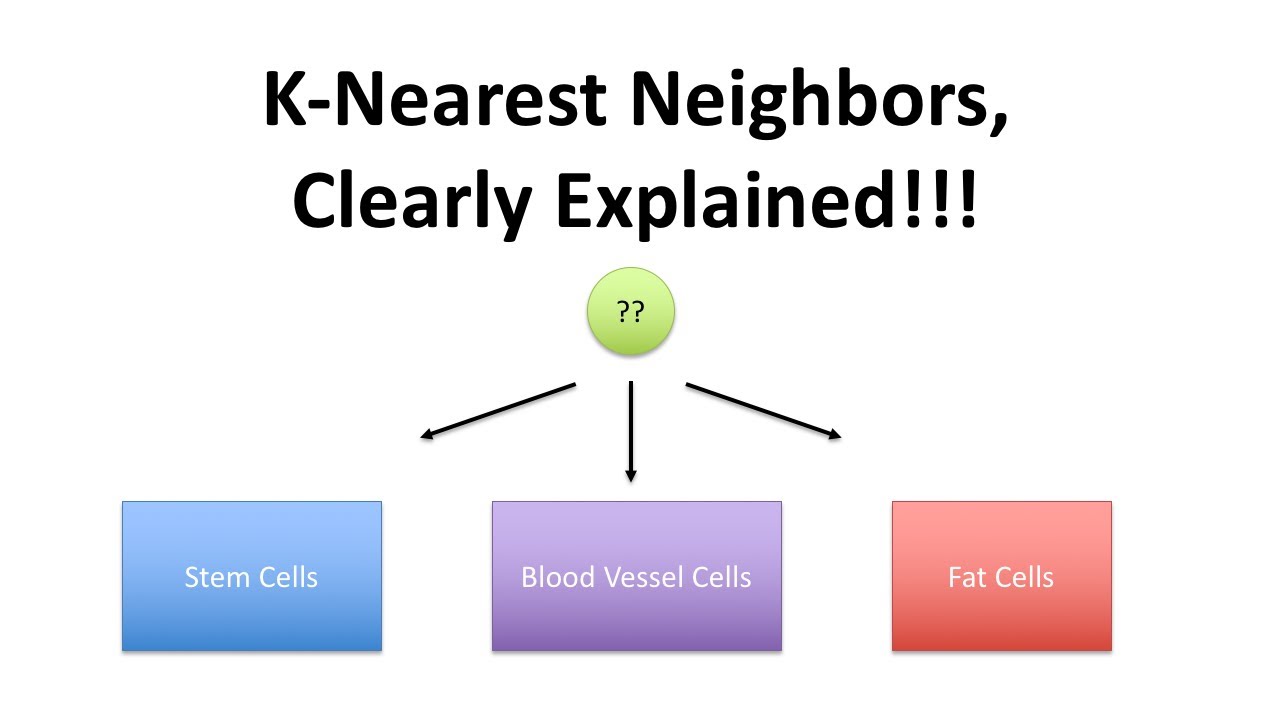

Categorizing data points based on their distance to other points in a training data set can be a simple yet effective way of classifying data. k-nearest neighbor (KNN) is the “guilty by association” algorithm. KNN is an instance-based lazy learner, which means there’s no real training phase.

You load the training data into the model and let it sit until you are ready to use the classifier. When a new query instance arrives, the KNN model finds the k training points nearest to it; if k is 5, it looks up the classes of the five nearest neighbors. To assign a label or class, the model then takes a vote among those neighbors, and the class held by the majority wins.
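The procedure above can be sketched in a few lines of Python. This is a minimal, brute-force illustration, not a reference implementation: the class and method names (`KNNClassifier`, `fit`, `predict_one`) are hypothetical, and Euclidean distance with a simple majority vote is an assumption, since the text does not fix a distance measure or a tie-breaking rule. Note that `fit` only stores the data, which is what "lazy learner" means in practice.

```python
import math
from collections import Counter


class KNNClassifier:
    """Brute-force k-nearest-neighbor classifier (illustrative sketch)."""

    def __init__(self, k=5):
        self.k = k
        self._points = []
        self._labels = []

    def fit(self, points, labels):
        # Lazy learning: "training" only stores the examples; nothing is computed.
        self._points = [tuple(p) for p in points]
        self._labels = list(labels)
        return self

    def predict_one(self, query):
        # Measure the Euclidean distance from the query to every stored point.
        scored = [
            (math.dist(query, point), label)
            for point, label in zip(self._points, self._labels)
        ]
        # Keep the k closest neighbors.
        nearest = sorted(scored, key=lambda pair: pair[0])[: self.k]
        # Majority vote. Counter.most_common lists equal counts in the order first
        # seen, so a tie goes to the tied label whose member is nearest.
        votes = Counter(label for _, label in nearest)
        return votes.most_common(1)[0][0]


train_X = [(1.0, 1.0), (1.5, 2.0), (2.0, 1.0), (6.0, 6.0), (7.0, 7.5), (6.5, 7.0)]
train_y = ["red", "red", "red", "blue", "blue", "blue"]

clf = KNNClassifier(k=3).fit(train_X, train_y)
print(clf.predict_one((1.2, 1.5)))  # red
print(clf.predict_one((6.8, 6.9)))  # blue
```

Choosing an odd k for a two-class problem, as here, avoids tied votes altogether.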

Although KNN trains almost instantly, its query time (and storage requirements) can be higher than those of other models. This is especially true as the number of data points grows, because the model keeps all of the training data rather than a compact learned summary of it, and each query has to be compared against those stored points.
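To put rough numbers on this, assume a brute-force implementation that stores $n$ training points with $d$ attributes each and keeps the $k$ closest candidates in a heap. (Index structures such as k-d trees or ball trees can lower the query cost, mainly in low dimensions; they are not part of this sketch.)

```latex
\begin{aligned}
\text{storage} &= O(nd) \\
\text{cost of one query} &= \underbrace{O(nd)}_{n \text{ distance computations}}
  + \underbrace{O(n \log k)}_{\text{keeping the } k \text{ closest}}
\end{aligned}
```

Both terms grow linearly with $n$, so doubling the training set roughly doubles the memory footprint and the time needed to answer each query.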

The greatest drawback of this method is that irrelevant attributes can dominate the distance calculation and obscure the important ones. Other models, such as decision trees, are better able to ignore these distractions. There are ways to correct for this, such as weighting the attributes so that relevant ones count for more, but you'll still need to use your judgment when deciding which model to use.
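The following sketch shows both the problem and the weighting fix on a toy data set. Attribute 0 separates the two classes, while attribute 1 is noise on a much larger scale. The helper names `weighted_distance` and `knn_predict` are hypothetical, and the weights are hand-picked for illustration (an assumption); in practice they might come from domain knowledge or a feature-selection step. A weight of 0 simply drops an attribute.

```python
import math
from collections import Counter


def weighted_distance(a, b, weights):
    # Euclidean distance with each attribute's squared difference scaled by its weight.
    return math.sqrt(sum(w * (x - y) ** 2 for x, y, w in zip(a, b, weights)))


def knn_predict(points, labels, query, k, weights):
    # Brute-force k-NN: rank stored points by weighted distance, then majority vote.
    ranked = sorted(
        zip(points, labels),
        key=lambda pair: weighted_distance(query, pair[0], weights),
    )
    votes = Counter(label for _, label in ranked[:k])
    return votes.most_common(1)[0][0]


# Attribute 0 separates the classes; attribute 1 is irrelevant noise on a larger scale.
points = [(0, 90), (1, 10), (0, 50), (10, 85), (9, 15), (10, 55)]
labels = ["A", "A", "A", "B", "B", "B"]
query = (1, 88)  # attribute 0 says this belongs with class A

print(knn_predict(points, labels, query, k=3, weights=(1, 1)))  # B: fooled by the noise
print(knn_predict(points, labels, query, k=3, weights=(1, 0)))  # A: noise ignored
```

With equal weights, the noisy attribute pulls two class-B points into the three nearest neighbors. Because raw attribute scales act as implicit weights in the same way, attributes are often standardized before running KNN.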
